Approaches on network vertex embedding

-

摘要: 网络是一种常用的数据结构, 在社交、通信和生物等领域广泛存在, 如何对网络顶点进行表示是学术界和工业界广泛关注的难点问题之一. 网络顶点表示学习旨在将顶点映射到一个低维的向量空间, 并且能够保留网络中顶点间的拓扑结构. 本文在分析网络顶点表示学习的动机与挑战的基础上, 对目前网络顶点表示学习的主流方法进行了详细分析与比较, 主要包括基于矩阵分解、基于随机游走和基于深度学习的方法, 最后介绍了衡量网络顶点表示性能的方法.Abstract: Network is a commonly used data structure, which is widely applied in social network, communication and biological fields. Thus, how to represent network vertices is one of the difficult problems that is widely concerned in academia and industry. Network vertex representation aims at learning to map each vertex into a vector in a low-dimensional space, and simultaneously preserving the topology structure between vertices in the network. Based on the analysis of the motivation and challenges of network vertex representation, this paper analyzes and compares the mainstream methods of network vertex representation in detail, including matrix decomposition, random walk and deep learning based approaches, and finally introduces the methods to measure the performance of network vertex representation.

-

Key words:

- network embedding /

- random walk /

- matrix factorization /

- deep neural network

-

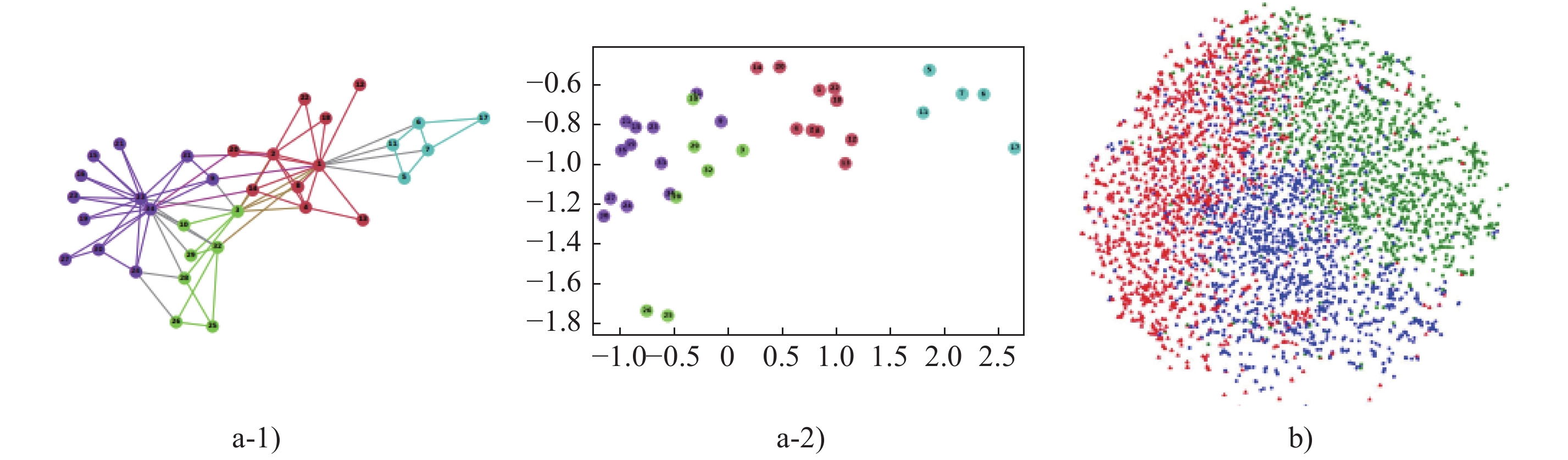

图 1 网络一阶、二阶相似性示例[3]

Fig. 1 An example of first-order proximity and second-order proximity

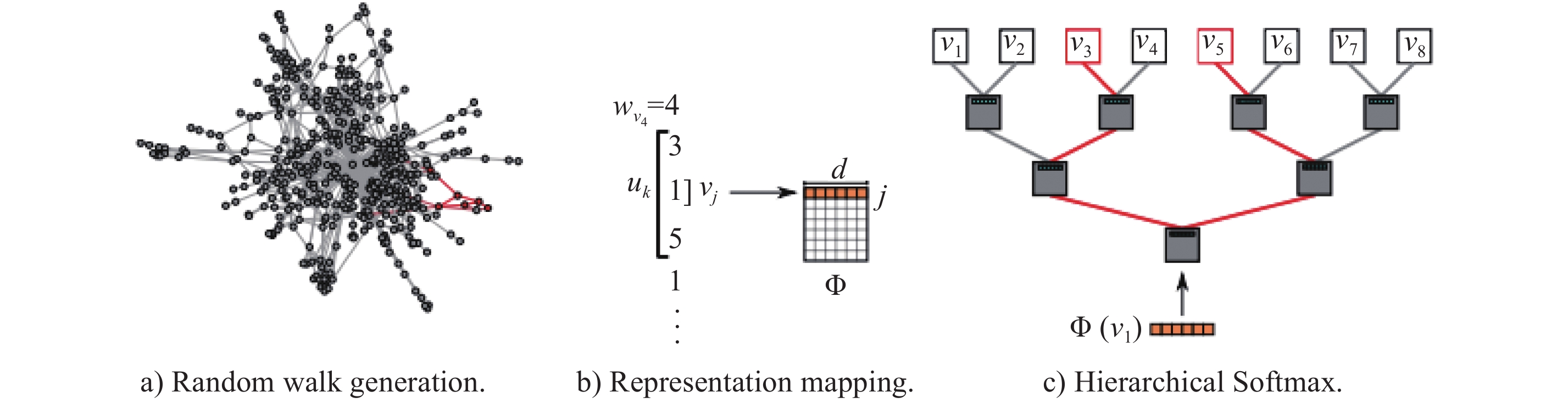

图 2 DeepWalk算法流程[15]

Fig. 2 Workflow for the DeepWalk algorithm

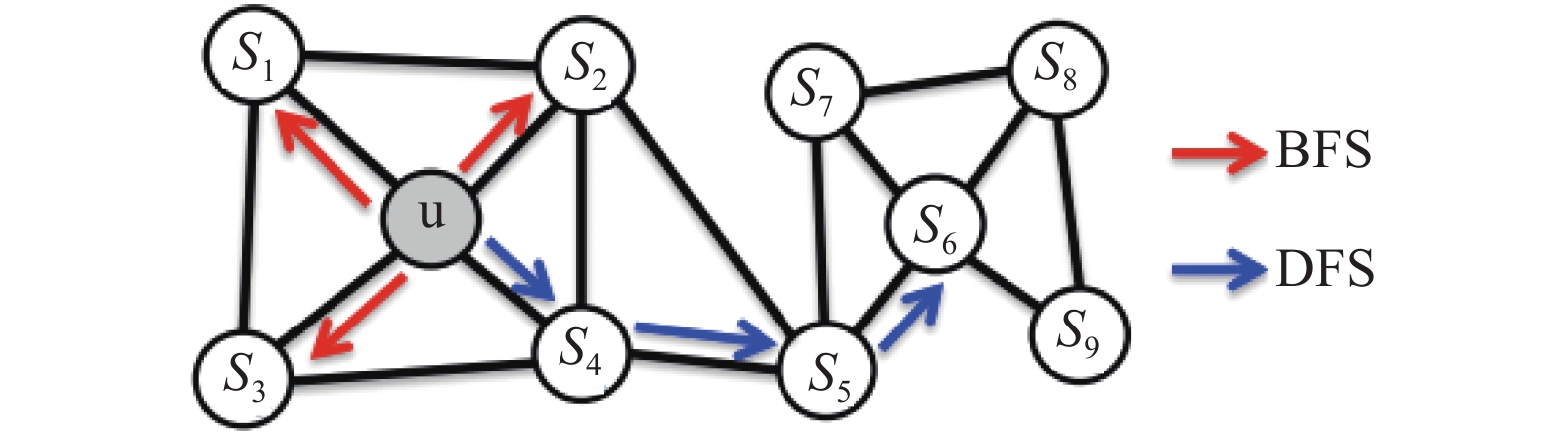

图 3 网络的两种搜索策略[17]

Fig. 3 BFS and DFS search strategies

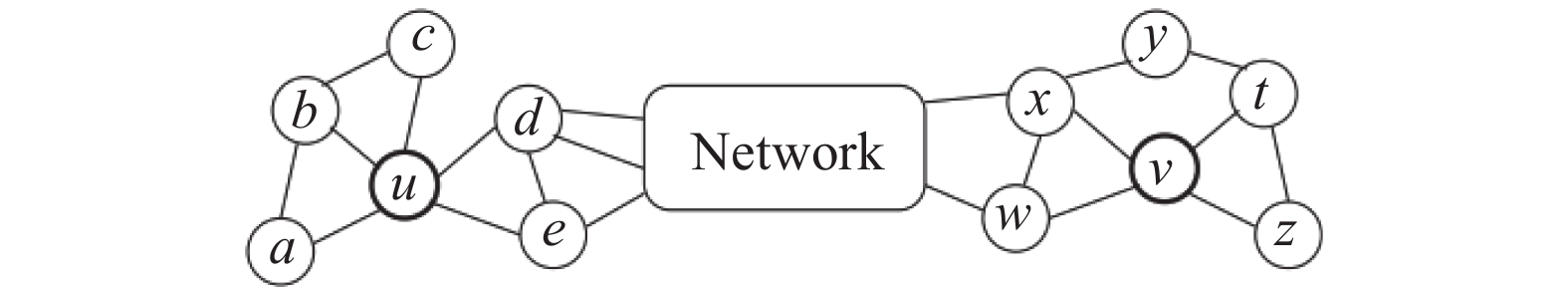

图 4 相距远且结构相似的顶点 u 和 v 示例[19]

Fig. 4 Nodes u and v are structurally similar but far apart in the network

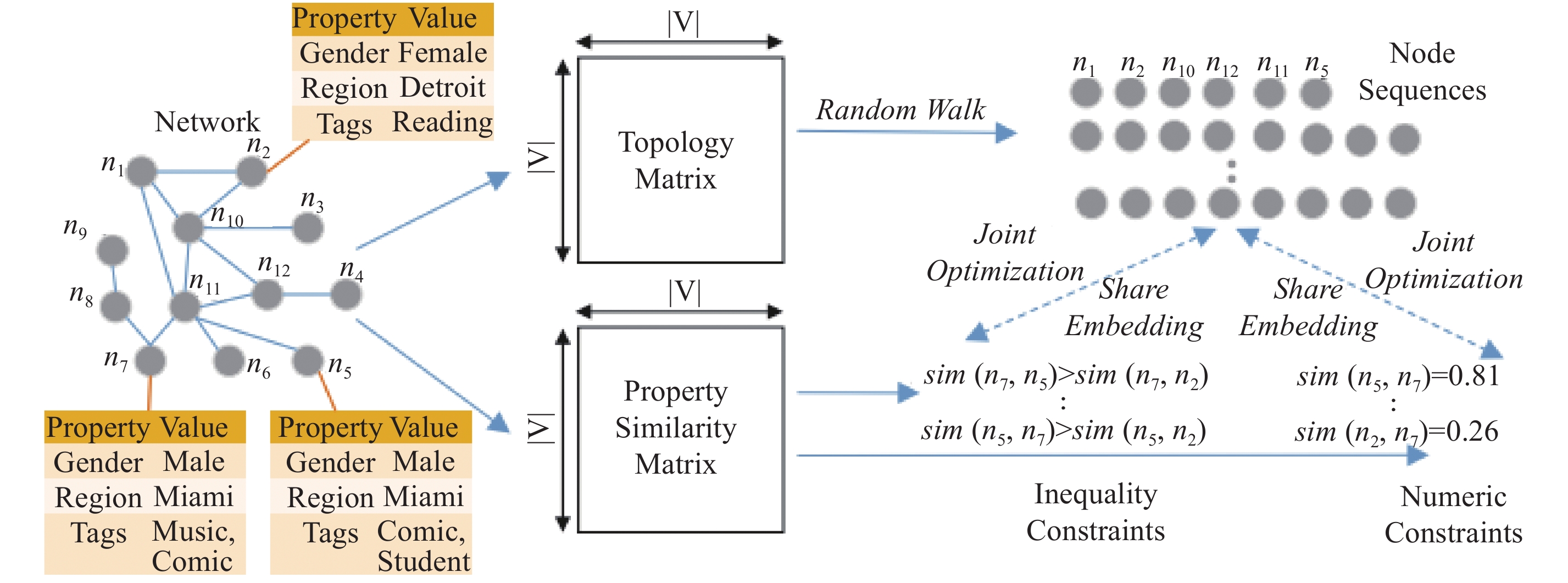

图 5 PPNE框架[20]

Fig. 5 The framework of PPNE

图 6 SDNE框架[22]

Fig. 6 The framework of SDNE

图 7 SiNE框架[25]

Fig. 7 The framework of SiNE

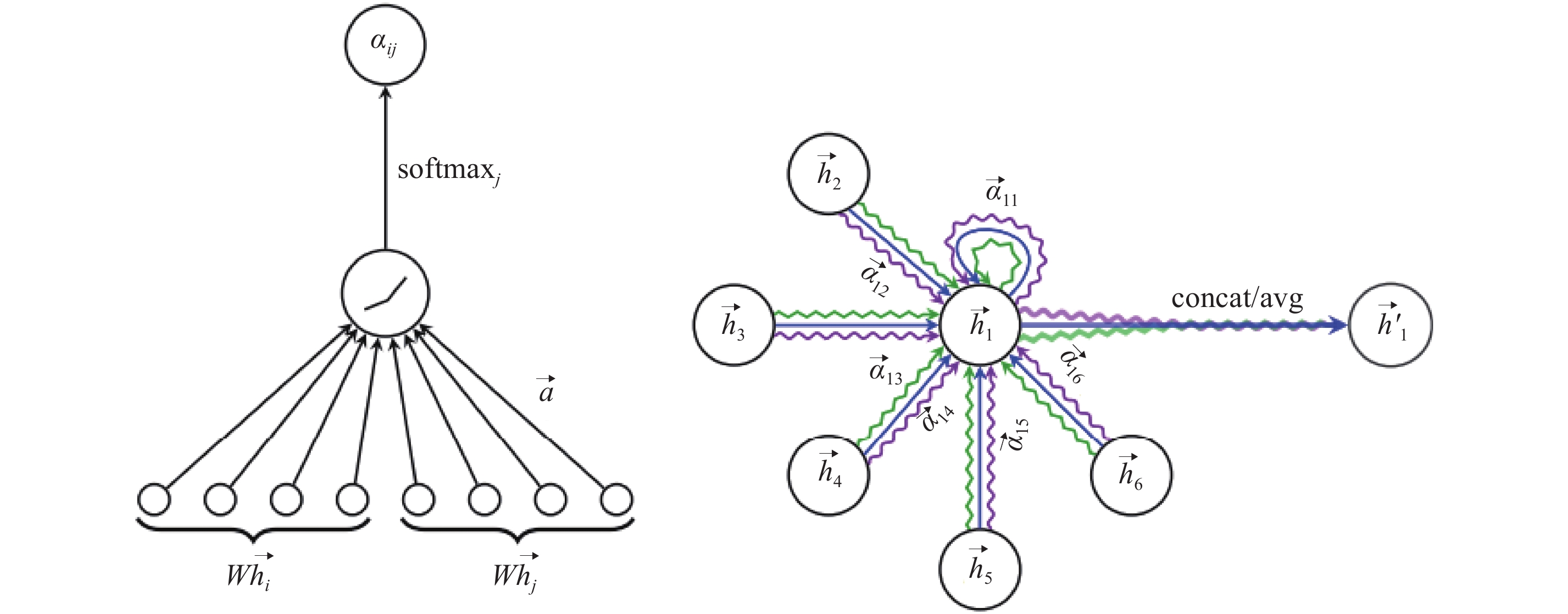

图 8 图注意力机制(左图)和多头注意力(右图)[31]

Fig. 8 Illustration of the attention mechanism (left) and multi-head attention (right)

-

[1] FREEMAN L C. Visualizing social networks [J]. Social Network Data Analytics, 2000, 6(4): 411-429. [2] THEOCHARIDIS A, DONGEN S V, ENRIGHT A J, et al. Network visualization and analysis of gene expression data using BioLayout [J]. Nature Protocol, 2009, 4(10): 1535-1550. [3] TANG J, QU M, WANG M, et al. LINE: Large-scale information network embedding [C]// The Web Conference. 2015: 1067-1077. [4] LIBENNOWELL D, KLEINBERG J. The link-prediction problem for social networks [J]. Journal of the Association for Information Science and Technology, 2007, 58(7): 1019-1031. [5] SEN P, NAMATA G, BILGIC M, et al. Collective classification in network data [J]. Ai Magazine, 2008, 29(3): 93-106. [6] HERMAN I, MELANCON G, MARSHALL M S, et al. Graph visualization and navigation in information visualization: A survey [J]. IEEE Transactions on Visualization and Computer Graphics, 2000, 6(1): 24-43. [7] ROWEIS S T, SAUL L K. Nonlinear dimensionality reduction by locally linear embedding [J]. Science, 2000, 290(5500): 2323-2326. [8] BELKIN M, NIYOGI P. Laplacian eigenmaps and spectral techniques for embedding and clustering [C]// Neural Information Processing Systems. 2001: 585-591. [9] CAO S, LU W, XU Q, et al. GraRep: Learning graph representations with global structural information [C]// Conference on Information and Knowledge Management. 2015: 891-900. [10] OU M, CUI P, PEI J, et al. Asymmetric transitivity preserving graph embedding [C]// Knowledge Discovery and Data Mining. 2016: 1105-1114. [11] MOUSAZADEH S, COHEN I. Embedding and function extension on directed graph [J]. Signal Processing, 2015, 111: 137-149. [12] MIKOLOV T, SUTSKEVER I, CHEN K, et al. Distributed representations of words and phrases and their compositionality [C]// Neural Information Processing Systems. 2013: 3111-3119. [13] MIKOLOV T, CHEN K, CORRADO G S, et al. Efficient estimation of word representations in vector space [C]// International Conference on Learning Representations. 2013. [14] MIKOLOV T, YIH W, ZWEIG G, et al. Linguistic regularities in continuous space word representations [C]// North American Chapter of the Association for Computational Linguistics. 2013: 746-751. [15] PEROZZI B, ALRFOU R, SKIENA S, et al. DeepWalk: Online learning of social representations [C]// Knowledge Discovery and Data Mining. 2014: 701-710. [16] YANG J, LESKOVEC J. Overlapping communities explain core-periphery organization of networks [J]. Proceedings of the IEEE, 2014, 102(12): 1892-1902. [17] GROVER A, LESKOVEC J. Node2vec: Scalable feature learning for networks [C]// Knowledge Discovery and Data Mining. 2016: 855-864. [18] NARAYANAN A, CHANDRAMOHAN M, CHEN L, et al. Subgraph2vec: Learning distributed representations of rooted sub-graphs from large graphs [C]// ArXiv: Learning. 2016. [19] RIBEIRO L F, SAVERESE P H, FIGUEIREDO D R, et al. Struc2vec: Learning node representations from structural identity [C]// Knowledge Discovery and Data Mining. 2017: 385-394. [20] LI C, WANG S, YANG D, et al. PPNE: Property preserving network embedding [C]// Database Systems for Advanced Applications. 2017: 163-179. [21] YANG C, LIU Z, ZHAO D, et al. Network representation learning with rich text information [C]// International Conference on Artificial Intelligence. 2015: 2111-2117. [22] WANG D, CUI P, ZHU W, et al. Structural deep network embedding [C]// Knowledge Discovery and Data Mining. 2016: 1225-1234. [23] CHANG S, HAN W, TANG J, et al. Heterogeneous network embedding via deep architectures [C]// Knowledge Discovery and Data Mining. 2015: 119-128. [24] TANG J, CHANG S, AGGARWAL C C, et al. Negative link prediction in social media [C]// Web Search and Data Mining. 2015: 87-96. [25] WANG S, TANG J, AGGARWAL C C, et al. Signed network embedding in social media [C]// Siam International Conference on Data Mining. 2017: 327-335. [26] HE K, ZHANG X, REN S, et al. Deep residual learning for image recognition [C]// Computer Vision and Pattern Recognition. 2016: 770-778. [27] KIPF T, WELLING M. Semi-supervised classification with graph convolutional networks [C]// ArXiv: Learning. 2016. [28] DEFFERRARD M, BRESSON X, VANDERGHEYNST P, et al. Convolutional neural networks on graphs with fast localized spectral filtering [C]// Neural Information Processing Systems. 2016: 3844-3852. [29] NIEPERT M, AHMED M H, KUTZKOV K, et al. Learning convolutional neural networks for graphs [C]// International Conference on Machine Learning. 2016: 2014-2023. [30] BAHDANAU D, CHO K, BENGIO Y, et al. Neural machine translation by jointly learning to align and translate [C]// ArXiv: Computation and Language. 2014. [31] VELICKOVIC P, CUCURULL G, CASANOVA A, et al. Graph attention networks [C]// ArXiv: Machine Learning. 2017. [32] BHAGAT S, CORMODE G, ROZENBAUM I, et al. Applying link-based classification to label blogs [C]// Web Mining and Web Usage Analysis. 2009: 97-117. [33] PEARSON K. LIII. On lines and planes of closest fit to systems of points in space [J]. Philosophical Magazine Series 1, 1901, 2(11): 559-572. [34] DER MAATEN L V, HINTON G E. Visualizing data using t-SNE [J]. Journal of Machine Learning Research, 2008, 9(11): 2579-2605. -

下载:

下载: